Qwen 2.5: the most open AI yet?

Alibaba’s bold move to redefine AI openness with over 100 models

Alibaba’s Qwen2.5 series has made waves in the AI community recently, debuting over 100 models at the Apsara Conference 2024.

Unveiled by Alibaba Cloud CTO Jingren Zhou, the release includes not only the core language model but also multimodal (Qwen2-VL) and specialized versions for coding (Qwen2.5-Coder) and math (Qwen2.5-Math).

A developer’s dream: 100+ models at once

To developers, Qwen has earned the nickname “Big Boy” for a reason. Alibaba Cloud has laid everything on the table: the best models, the widest range of sizes, and the most powerful specialized models, all open-sourced with no tricks or hidden catches.

Let’s look at the performance.

In benchmarks like MMLU-redux, the Qwen2.5-72B model, with less than one-fifth of the parameters, challenges the massive Llama3.1-405B model and even surpasses it in some metrics. For independent developers, this is a game-changer: a high-performance model without the hefty resource demands of larger models. Developers can now deploy large-scale applications with smaller, more efficient models at a fraction of the cost.
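To put the size gap in numbers, here is a rough back-of-envelope sketch. It assumes the parameter counts implied by the model names (72B and 405B) and 16-bit weights at 2 bytes per parameter; the helper `weight_memory_gb` is illustrative and ignores the KV cache, activations, and runtime overhead, so real deployments will need more memory than this.

```python
# Parameter counts are read off the model names (assumption).
QWEN_PARAMS = 72e9     # Qwen2.5-72B
LLAMA_PARAMS = 405e9   # Llama3.1-405B


def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Defaults to 2 bytes per parameter (16-bit weights). This is a lower
    bound: KV cache, activations, and framework overhead are not counted.
    """
    return num_params * bytes_per_param / 1e9


# Fraction of Llama3.1-405B's parameters that Qwen2.5-72B uses.
ratio = QWEN_PARAMS / LLAMA_PARAMS

print(f"parameter ratio: {ratio:.3f}")
print(f"Qwen2.5-72B weights:   ~{weight_memory_gb(QWEN_PARAMS):.0f} GB")
print(f"Llama3.1-405B weights: ~{weight_memory_gb(LLAMA_PARAMS):.0f} GB")
```

Under these assumptions the ratio comes out just under 0.18, and the weight footprint scales by the same factor.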

How did Qwen2.5 achieve such a leap?

According to sources close to GenAI Assembling, the entire Qwen2.5 series was pre-trained on a massive new dataset with up to 18 trillion tokens, resulting in an 18% performance improvement over Qwen2. The new models demonstrate stronger general knowledge, coding abilities, and mathematical reasoning.

For example, the Qwen2.5-72B model scores 86.8 on MMLU-redux (general knowledge), 88.2 on MBPP (coding skills), and 83.1 on the MATH benchmark (mathematical abilities).

These models support up to 128K tokens in context length and can generate up to 8K tokens of content. They also handle over 29 languages, including Chinese, English, French, and Spanish.
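The two limits above interact when you plan a request. A minimal sketch, assuming "128K" and "8K" mean 131,072 and 8,192 tokens and that the prompt and the generated text share one context window; `generation_budget` is a hypothetical helper, not part of any Qwen tooling:

```python
CONTEXT_WINDOW = 128 * 1024   # "128K" read as 131,072 tokens (assumption)
MAX_GENERATION = 8 * 1024     # "8K" read as 8,192 tokens (assumption)


def generation_budget(prompt_tokens: int, requested_tokens: int) -> int:
    """Return how many new tokens a request can actually generate.

    The result is capped by three things: what the caller asked for,
    the per-response generation limit, and the space left in the
    context window after the prompt.
    """
    if prompt_tokens < 0 or requested_tokens < 0:
        raise ValueError("token counts must be non-negative")
    remaining = CONTEXT_WINDOW - prompt_tokens
    if remaining <= 0:
        return 0  # the prompt alone already fills the window
    return min(requested_tokens, MAX_GENERATION, remaining)


# A short prompt is limited by the generation cap, not the window.
print(generation_budget(prompt_tokens=1_000, requested_tokens=20_000))
# A very long prompt is limited by the space left in the window.
print(generation_budget(prompt_tokens=130_000, requested_tokens=8_192))
```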

Moreover, Qwen2.5 has made significant strides in areas like following instructions, generating long-form text, understanding structured data, and producing structured outputs. It adapts better to system prompts, which strengthens role-playing and improves chatbot performance.

More than just general models: specialized models shine

Beyond the general models, Qwen2.5 includes specialized models. For example, Qwen2.5-Coder, designed for programming tasks, comes in 1.5B and 7B versions, with a 32B version in development. This coder model is trained on 5.5 trillion tokens of mixed source code and text data, ensuring strong performance on coding benchmarks, even with smaller sizes.

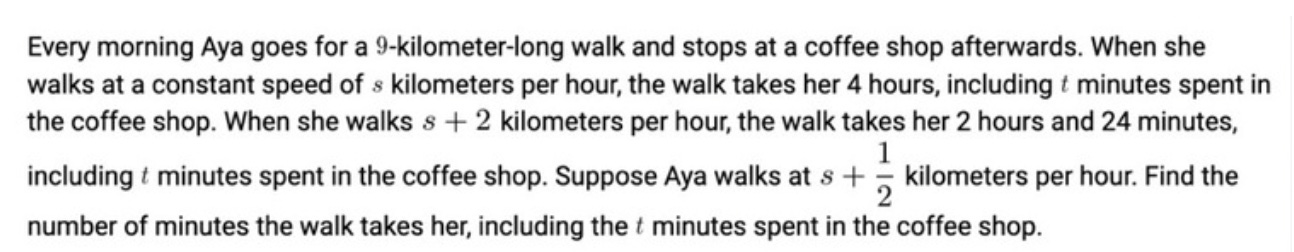

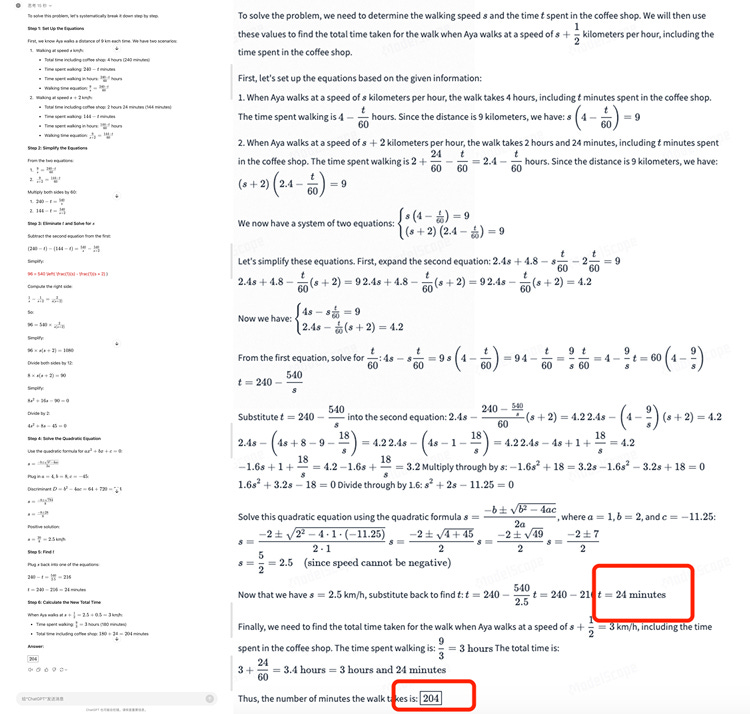

Qwen2.5-Math, another specialized model, offers versions at 1.5B, 7B, and 72B parameters. These models are trained on high-quality mathematical datasets and can solve complex math problems in both Chinese and English.

In one head-to-head test, Qwen2.5-Math was pitted against OpenAI’s latest o1 model on a difficult AIME problem. o1 solved the problem in 15 seconds and Qwen2.5-Math in 29 seconds, with both arriving at the correct answer using different approaches.

The most open model ecosystem

Qwen’s open-source strategy is comprehensive. The Qwen2.5 language model is available in seven sizes: 0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B, along with base, instruct (instruction-following), and quantized versions. Developers had been clamoring for a 32B model and for GGUF builds, and Qwen delivered, open-sourcing GGUF, GPTQ, and AWQ quantized models to give developers more options and eliminate the need to wait for Llama.
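To get a feel for how quickly sizes and variants multiply, the sketch below enumerates the combinations named above. The name pattern (`Qwen2.5-<size>`, an `-Instruct` suffix, a trailing quantization format) and the pairing of quantized builds with instruct models are assumptions for illustration; the published checkpoint names may differ or include extra detail such as bit width.

```python
from itertools import product

SIZES = ["0.5B", "1.5B", "3B", "7B", "14B", "32B", "72B"]
# Suffixes below are an assumed naming convention, not taken from the article.
VARIANT_SUFFIXES = {"base": "", "instruct": "-Instruct"}
QUANT_FORMATS = ["GGUF", "GPTQ", "AWQ"]


def list_checkpoints() -> list[str]:
    """Build illustrative labels for every size/variant/quantization combination."""
    names = []
    # Full-precision base and instruct models for each size.
    for size, suffix in product(SIZES, VARIANT_SUFFIXES.values()):
        names.append(f"Qwen2.5-{size}{suffix}")
    # Quantized builds, assumed here to be derived from the instruct models.
    for size, fmt in product(SIZES, QUANT_FORMATS):
        names.append(f"Qwen2.5-{size}-Instruct-{fmt}")
    return names


checkpoints = list_checkpoints()
print(len(checkpoints), "combinations, for example:", checkpoints[:4])
```

Seven sizes times five variants already gives 35 language-model checkpoints under these assumptions, before counting the coder, math, and vision-language families.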

In terms of multimodal capabilities, Qwen2-VL-72B is now open-sourced, bringing advanced vision-language abilities like recognizing images of varying resolutions, understanding videos over 20 minutes long, and performing autonomous operations on phones and robots.

Summing it up

Qwen2.5 offers a diverse range of models with vastly improved performance across different sizes, languages, and specialized tasks. On top of that, it adds impressive multimodal capabilities. It’s clear that Qwen2.5 is one of the most comprehensive and versatile open-source AI ecosystems available.

Open source, but are developers using it?

With so many models open-sourced, a crucial question arises: Are developers actually using them? According to Jingren Zhou, by mid-September 2024, Qwen models had been downloaded over 40 million times, and the global open-source community had created over 50,000 derivative models based on Qwen, making it second only to Llama in popularity.

This level of adoption isn’t just about dumping models onto the open-source community; it requires hard work and close collaboration with developers. From Qwen1.5 onwards, Tongyi’s team has partnered with Hugging Face, integrating Qwen into the Hugging Face Transformers library to simplify usage for developers. Additionally, the team has worked to ensure compatibility with various open-source frameworks, including vLLM, AutoGPTQ, and llama.cpp.
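In practice, the Transformers integration means a Qwen model loads like any other causal language model. The following is a minimal sketch rather than an official recipe: the repository ID `Qwen/Qwen2.5-7B-Instruct` and the default system prompt are assumptions, `build_messages` and `generate_reply` are illustrative helpers, and `device_map="auto"` additionally requires the `accelerate` package.

```python
MODEL_ID = "Qwen/Qwen2.5-7B-Instruct"  # assumed Hub repository ID


def build_messages(user_prompt: str,
                   system_prompt: str = "You are a helpful assistant.") -> list[dict]:
    """Build a chat in the role/content message format used by chat templates."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str, max_new_tokens: int = 256) -> str:
    """Load the model and generate one reply (downloads weights on first use)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )

    # Render the chat into the prompt string the model was trained on.
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Summarize the Qwen2.5 release in one sentence.")` would then return the model's answer as a string, provided the weights fit on the available hardware.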

As Zhou explained, “We open-source so many models to give developers the flexibility to choose what works best for their use cases.” At the same time, Alibaba Cloud provides closed-source API options for enterprise customers, catering to their specific needs.

Final thoughts: open or closed? It’s all about the market

Alibaba Cloud’s unique approach lies in its balance between open-source and closed-source offerings. Unlike OpenAI, which remains closed-source, or Meta, whose Llama models are openly released, Alibaba Cloud offers both. Zhou believes that whether a model should be open or closed isn’t a decision for AI companies to make; it should be determined by market demand.

As the debate over open vs. closed models rages on, one thing is clear: Tongyi Qianwen’s commitment to openness may keep it at the forefront of the AI industry for years to come.