Stanford duo creates AI model 4x faster than GPT-4o and builds an “On-Device Hugging Face”

Tiny Model, Huge Impact

Months ago, Nexa AI made waves in Silicon Valley’s AI community with the release of Octopus v2. This compact model, with 0.5B parameters, has captured attention not just for its speed (four times faster than GPT-4o) but also for its efficiency: it requires only a single GPU for inference. Octopus v2 delivers function-calling capabilities that rival those of GPT-4, with accuracy exceeding 98%, while running 140 times faster than traditional Retrieval-Augmented Generation (RAG) solutions.

The launch of Octopus v2 quickly brought Nexa AI into the spotlight. Named “No.1 Product of the Day” on Product Hunt, the model saw over 12,000 downloads on Hugging Face in its first month. Industry experts, including Hugging Face CTO Julien Chaumond and Figure AI founder Brett Adcock, have praised its rapid success.

Core tech: Functional Tokens

Founded by Stanford alumni Alex Chen and Zack Li, Nexa AI is a small but mighty team of eight full-time employees. Despite its size, the team has secured contracts with over ten leading enterprises across industries like 3C electronics, automotive, cybersecurity, and fashion. Its momentum is further evidenced by a recent $10 million funding round.

At the core of their technology are Functional Tokens. Unlike traditional methods that rely on lengthy retrieval processes, this approach uses a single token to encapsulate everything the model needs to know about a function (its name, parameters, and documentation), reducing context length by 95%. The shorter context allows for much faster processing and makes Octopus v2 particularly well suited to edge devices and mobile platforms, where speed and efficiency are critical.
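To make the idea concrete, here is a minimal Python sketch of how an application might act on a functional token once the model has emitted one. It is an illustration under stated assumptions, not Nexa AI's implementation: the token strings (`<fn_0>`, `<fn_1>`, `<fn_end>`), the output format, and the two device functions are all hypothetical. The point is only that the prompt no longer has to carry each function's description, because one reserved token identifies the function.

```python
import ast
import re

# Hypothetical on-device functions; names and signatures are illustrative only.
def take_photo(camera: str) -> str:
    return f"photo taken with {camera} camera"

def set_volume(level: int) -> str:
    return f"volume set to {level}"

# Each function is bound to one reserved token (the token strings are assumptions).
FUNCTIONAL_TOKENS = {
    "<fn_0>": take_photo,
    "<fn_1>": set_volume,
}

# Assumed output format: <fn_N>(arg1, arg2, ...)<fn_end>
CALL_PATTERN = re.compile(r"^(<fn_\d+>)\((.*)\)<fn_end>$")

def dispatch(model_output: str):
    """Parse a functional-token call emitted by the model and run the bound function."""
    match = CALL_PATTERN.match(model_output.strip())
    if match is None:
        raise ValueError(f"not a functional-token call: {model_output!r}")
    token, raw_args = match.groups()
    if token not in FUNCTIONAL_TOKENS:
        raise KeyError(f"unknown functional token: {token}")
    # Parse the arguments as Python literals only; nothing is executed here.
    args = ast.literal_eval(f"({raw_args},)") if raw_args.strip() else ()
    return FUNCTIONAL_TOKENS[token](*args)

if __name__ == "__main__":
    print(dispatch("<fn_0>('front')<fn_end>"))
    print(dispatch("<fn_1>(30)<fn_end>"))
```

In this sketch the mapping from token to function lives in the application, so the model only has to produce a short string; a retrieval-based setup would instead paste the candidate functions' documentation into every prompt.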

Building on the success of Octopus v2, Nexa AI quickly moved forward, releasing Octopus v3, a multimodal model under 1 billion parameters. This model extends the capabilities of its predecessor by supporting both text and image inputs across various edge devices, including Raspberry Pi. Additionally, Nexa AI introduced Octo-planner, a model specialized in executing multi-step tasks across different domains.

Model Hub: pioneering on-device AI

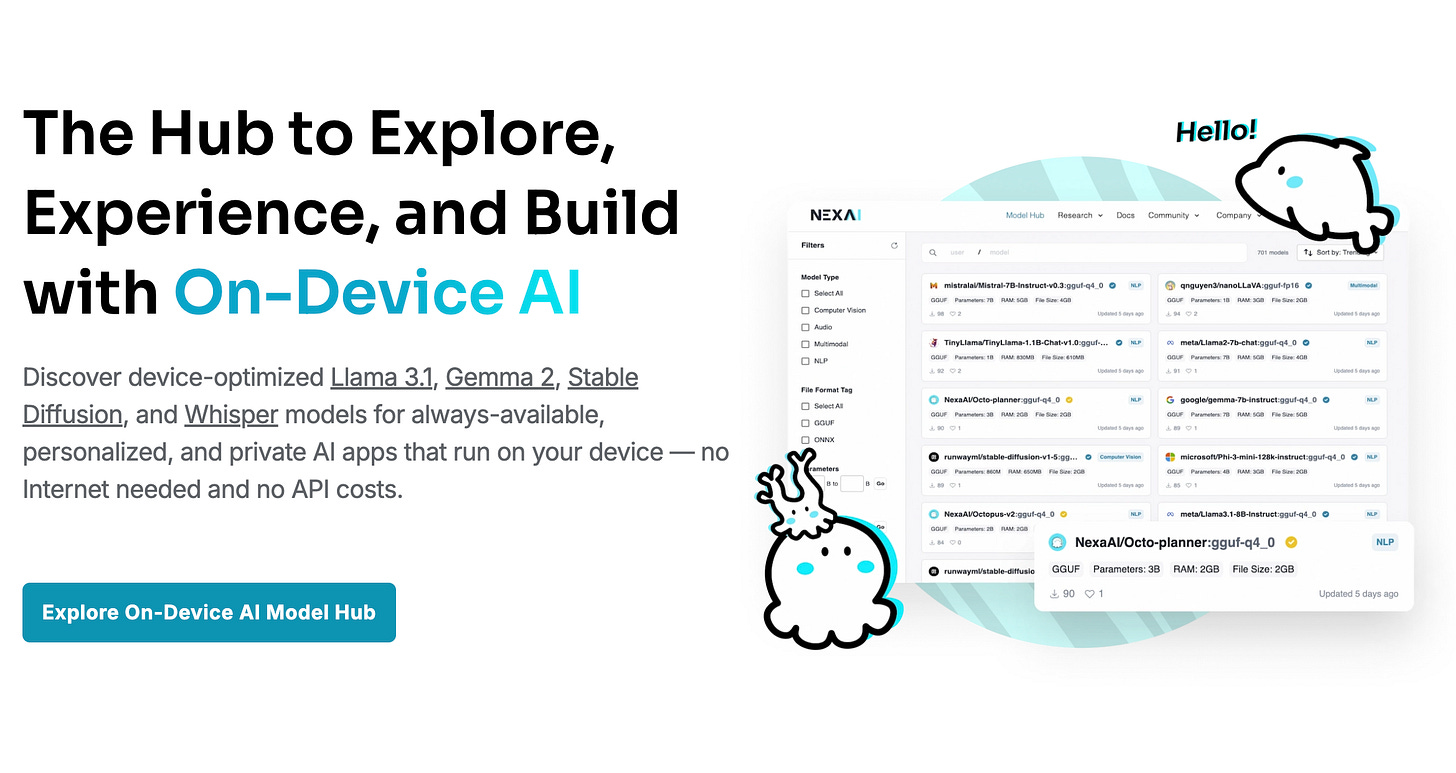

Nexa AI’s ambitions extend beyond individual models. They recently launched “Model Hub,” a comprehensive on-device AI development platform.

This platform includes a rich library of AI models optimized for local deployment, accompanied by an open-source SDK. Model Hub allows developers to deploy and fine-tune models on various devices without requiring an internet connection or incurring API fees, essentially offering an “on-device version of Hugging Face.”

Chen and Li also highlighted the advantages and challenges of working with small models. While large models excel across various subjects, small models can be optimized for specific domains, making them faster and more energy-efficient.

By deploying models on-device, Nexa AI can ensure user privacy and significantly reduce operational costs. They also discussed the competitive landscape, acknowledging the challenges posed by larger companies but emphasizing their unique approach to on-device AI, particularly their focus on function calling capabilities.

As Nexa AI continues to explore new possibilities in on-device AI, the company is gradually making its mark on the industry. From its early days at Stanford to its current work, Nexa AI’s focus on small models is helping it keep up with, and sometimes even outshine, its larger competitors.